The use of online surveys in contemporary social science research has grown rapidly thanks to benefits such as their cost-effectiveness and their ability to yield insights into attitudes, experiences, and perceptions. Unlike more established methods such as pen-and-paper surveys, they enable complex setups like experimental designs and the seamless integration of digital media content. Yet despite their user-friendliness, even seasoned researchers face numerous challenges when creating online surveys. To showcase both the versatility and the common pitfalls of online surveying, Martin Emmer, Christian Strippel, and Roland Toth of the Methods Lab organized the workshop "Introduction to Online Surveys" on February 22, 2024.

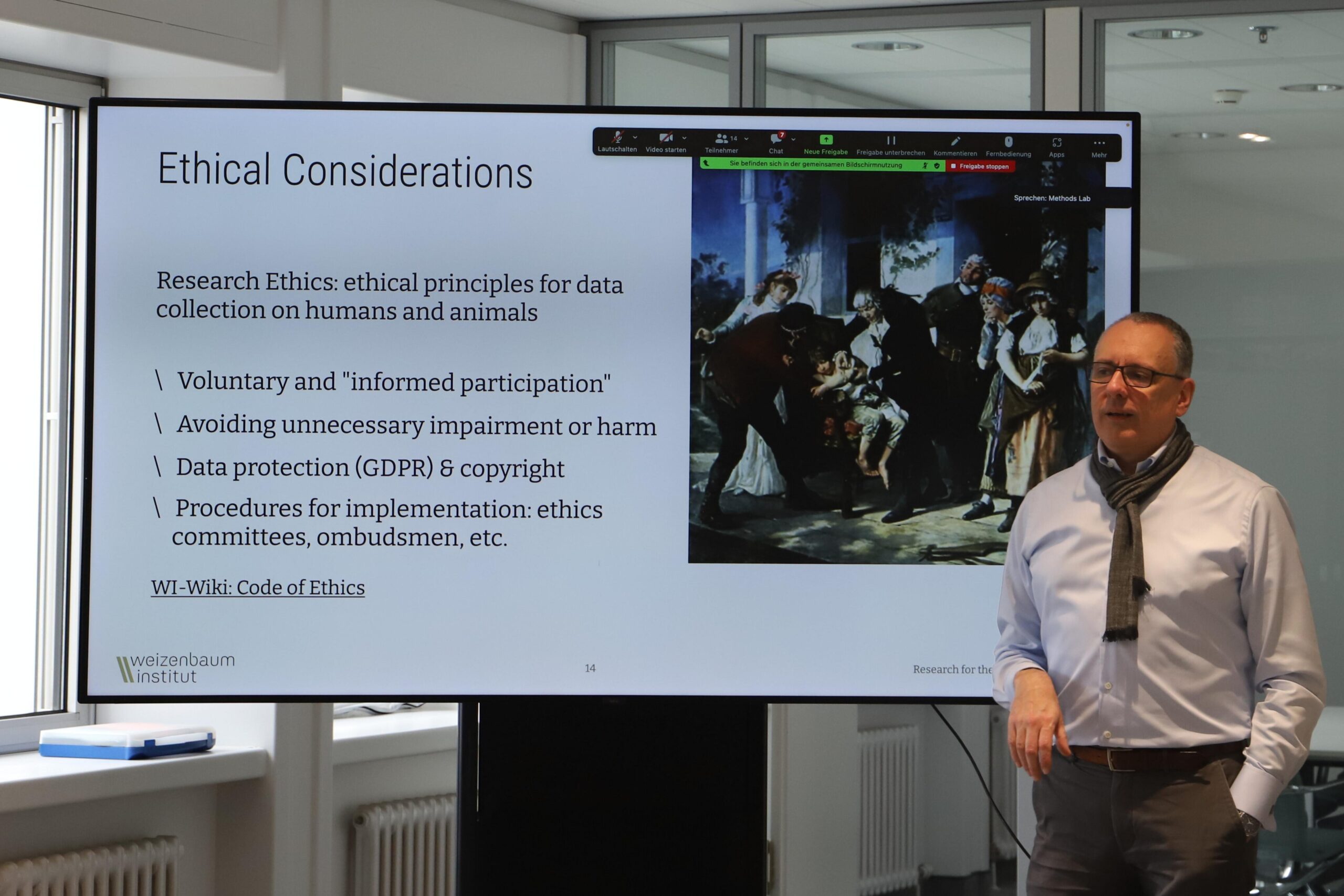

In the first segment, Martin Emmer provided a theoretical overview of the design and logic of online surveys. He began by outlining the common challenges and benefits of interviewing, with particular emphasis on social-psychological dynamics. Compared to online surveys, face-to-face interviews offer a more personal, engaging, and interactive experience, allowing interviewers to adjust questions and clarify answers in real time. However, they can be time-consuming and expensive, and they may introduce biases such as the interviewer effect. Online surveys, in turn, present their own challenges: limited control over the interview environment, a low threshold for dropping out, and the particularities of self-administration, such as the need for detailed written instructions for respondents. Nevertheless, self-administered, computer-based surveys offer numerous advantages, including cost-effectiveness, rapid data collection, the easy integration of visuals and other stimuli, and access to large and geographically dispersed populations. For the design of online surveys, Martin stressed the importance of clear question wording, ethical considerations, and robust procedures to ensure voluntary participation and data protection.

In the second part of the workshop, Christian Strippel turned to online access panel providers and the benefits and pitfalls of using them for survey research. Panel providers such as Bilendi/Respondi, YouGov, Cint, Civey, and the GESIS Panel maintain curated pools of potential survey participants. They handle recruitment and panel management, match participants with surveys relevant to their demographics and interests, and take care of survey distribution and data collection. While online panels offer advantages such as access to a broad participant pool, cost-efficiency, and streamlined sampling of specific subgroups, they also have limitations. For example, online panels are not fully representative of the general population because they exclude people who do not use the internet. Moreover, challenges arise from professional respondents, for instance so-called speeders, who rush through surveys, and straight-liners, who consistently choose the same response option in matrix questions. Strategies to counter these issues include attention checks throughout the questionnaire, the systematic exclusion of speeders and straight-liners, and quota-based screening. Christian concluded by outlining what makes a good online panel provider and how to plan a survey with one effectively.
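To make the exclusion of speeders and straight-liners more concrete, here is a minimal sketch of how such flags might be computed on collected responses. It is not the procedure presented in the workshop. The field names (`duration_seconds`, `matrix_answers`, `attention_check_passed`) are hypothetical, and the speeder rule (completion time below half of the median duration, set by the hypothetical `speed_ratio` parameter) is an assumed heuristic; appropriate thresholds depend on the questionnaire and should be chosen before data collection.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Response:
    # Hypothetical fields; real exports use the survey's own variable names.
    respondent_id: str
    duration_seconds: float       # total time taken to complete the survey
    matrix_answers: list[int]     # answers to one matrix question (e.g., a 1-5 scale)
    attention_check_passed: bool  # result of an embedded attention-check item


def is_straight_liner(answers: list[int]) -> bool:
    # Straight-lining: the same response option chosen for every matrix item.
    return len(answers) > 1 and len(set(answers)) == 1


def flag_responses(responses: list[Response], speed_ratio: float = 0.5) -> dict[str, list[str]]:
    # Assumed speeder rule: faster than speed_ratio times the median duration.
    cutoff = speed_ratio * median(r.duration_seconds for r in responses)
    flags: dict[str, list[str]] = {}
    for r in responses:
        reasons = []
        if r.duration_seconds < cutoff:
            reasons.append("speeder")
        if is_straight_liner(r.matrix_answers):
            reasons.append("straight-liner")
        if not r.attention_check_passed:
            reasons.append("failed attention check")
        flags[r.respondent_id] = reasons
    return flags


def clean(responses: list[Response], speed_ratio: float = 0.5) -> list[Response]:
    # Keep only respondents without any flag.
    flags = flag_responses(responses, speed_ratio)
    return [r for r in responses if not flags[r.respondent_id]]


# Invented example data for illustration only.
sample = [
    Response("a", 600, [4, 2, 5, 3], True),
    Response("b", 150, [3, 4, 2, 4], True),   # rushes through the survey
    Response("c", 540, [3, 3, 3, 3], True),   # identical answers in the matrix
    Response("d", 480, [2, 4, 4, 1], False),  # fails the attention check
    Response("e", 720, [5, 4, 4, 3], True),
]

print([r.respondent_id for r in clean(sample)])  # ['a', 'e']
```

Returning the reasons for each flag, rather than silently dropping cases, keeps the exclusion decisions transparent and reportable. Quota-based screening, by contrast, usually happens at the recruitment stage and is not covered by this sketch.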

The third and final segment of the workshop featured a live demonstration by Roland Toth on how to set up an online survey using the open-source software LimeSurvey, which the institute hosts on its own servers. In the course of the demonstration, he built the very evaluation questionnaire that participants filled out at the end of the workshop. Roland began with an overview of the general setup and the settings relevant to survey creation. He then showed how to create questions with different scales and display conditions and how to incorporate visual elements such as images. Throughout the demo, Roland returned to the issues of language and phrasing raised in the first part of the workshop, emphasizing rules for question wording and explaining why each question should ask for only one piece of information. The demonstration closed with a segment on viewing and exporting the collected data. After participants had completed the evaluation form, the workshop ended with a Q&A session.