On November 30th, 2023, the Methods Lab organized a workshop on quantitative text analysis. The workshop was conducted by Douglas Parry (Stellenbosch University) and covered the whole process of text analysis from data preparation to the visualization of sentiments or topics identified.

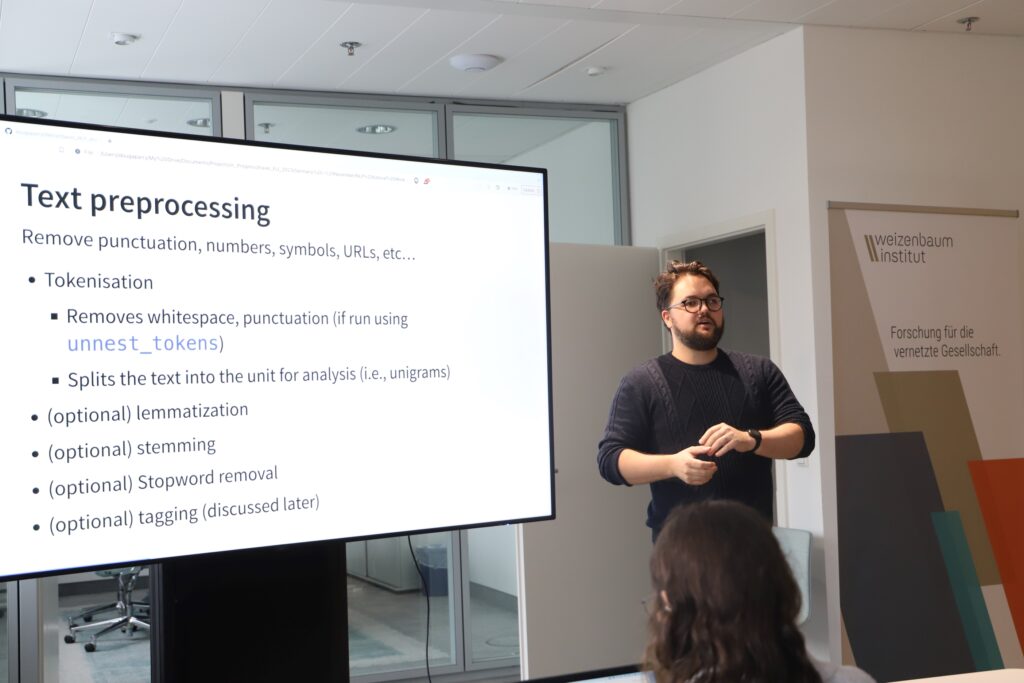

In the first half of the workshop, Douglas covered the first steps involved in text analysis, such as tokenization (the transformation of texts into smaller parts like single words or consecutive words), the removal of “stop words” (words that do not contain meaningful information), and the aggregation of content by meta-information (authors, books, chapters, etc.). Apart from the investigation of the frequency with which terms occur, sentiment analysis using existing dictionaries was also addressed. This technique involves assigning values to each word representing certain targeted characteristics (e.g., emotionality/polarity), which in turn allows for comparing overall sentiments between different corpora. Finally, the visualization of word occurrences and sentiments was covered. After this introduction, participants had the chance to apply their knowledge using the programming language R by solving tasks with texts Douglas provided.

In the second half of the workshop, Douglas focused on different methods of topic modeling, which ultimately attempt to assign texts to latent topics based on the words they contain. In comparison to simpler procedures covered in the first half of the workshop, topic models can also consider the context of words within the texts. Specifically, Douglas introduced participants to Latent Dirichlet Allocation (LDA), Correlated Topic Modeling (CTM), and Structural Topic Modeling (STM). One of the most important decisions to be made for any such model is the number of topics to emerge: too few may dilute nuances within topics and too many may lead to redundancies. The visualization and – most importantly – limitations of topic modeling were also discussed before participants performed topic modeling themselves with the data provided earlier. Finally, Douglas concluded with a summary of everything covered and an overview of advanced subjects in text analysis.

The workshop was very well-received and prepared all participants for text analysis in the future. Douglas balanced lecture-style sections and well-prepared, hands-on application very well and provided all materials in a way that participants could focus on the tasks at hand, while following a logical structure throughout. We would like to thank him for this great introduction to text analysis!